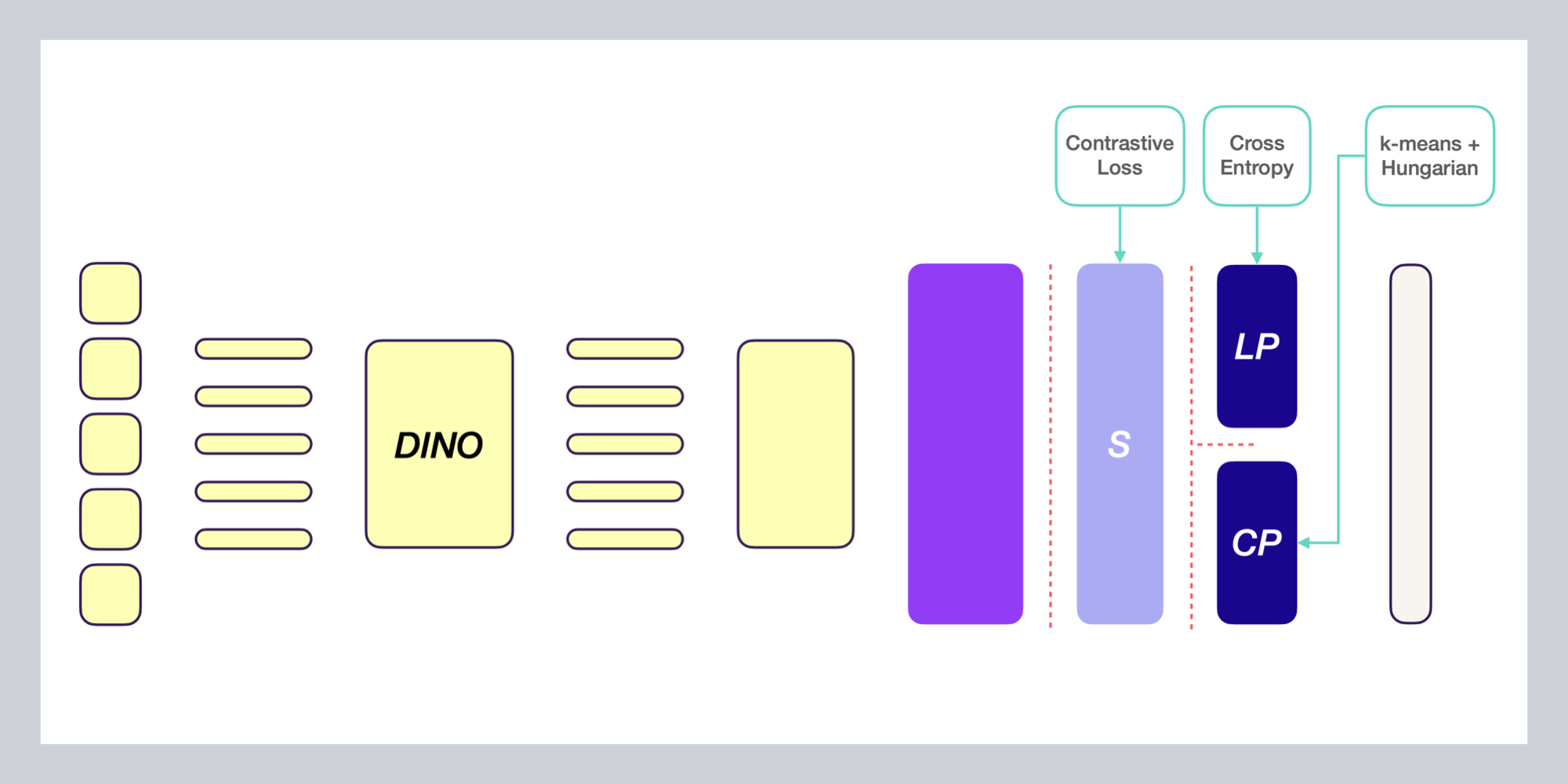

At Merantix Momentum, I work as a project lead for our contributions to the safe.trAIn research project spearheaded by Siemens. Within the project, I investigate reliable and label-efficient computer vision algorithms. We published a follow-up study on STEGO, a self-supervised semantic segmentation method, at the SAIAD Workshop at CVPR 2023 in Vancouver. — with Dr. Schambach and Dr. Otterbach.

The Merantix Momentum Research Insights blog post series is a platform for our researchers to share knowledge, whether by presenting their own research results or meta-analyses such as literature reviews. I've published two blog posts, one on self-supervised depth estimation and one on label-efficient semantic segmentation. — with Dr. Otterbach.

I host the monthly public paper discussion group at the AI Campus Berlin. With this event, we create a space for the Berlin ML community, scientific exchange, and education. We host a colorful mix of speakers, ranging from in-house engineers and researchers to external guests. Hit me up if you're interested in speaking. — with Dr. Otterbach.

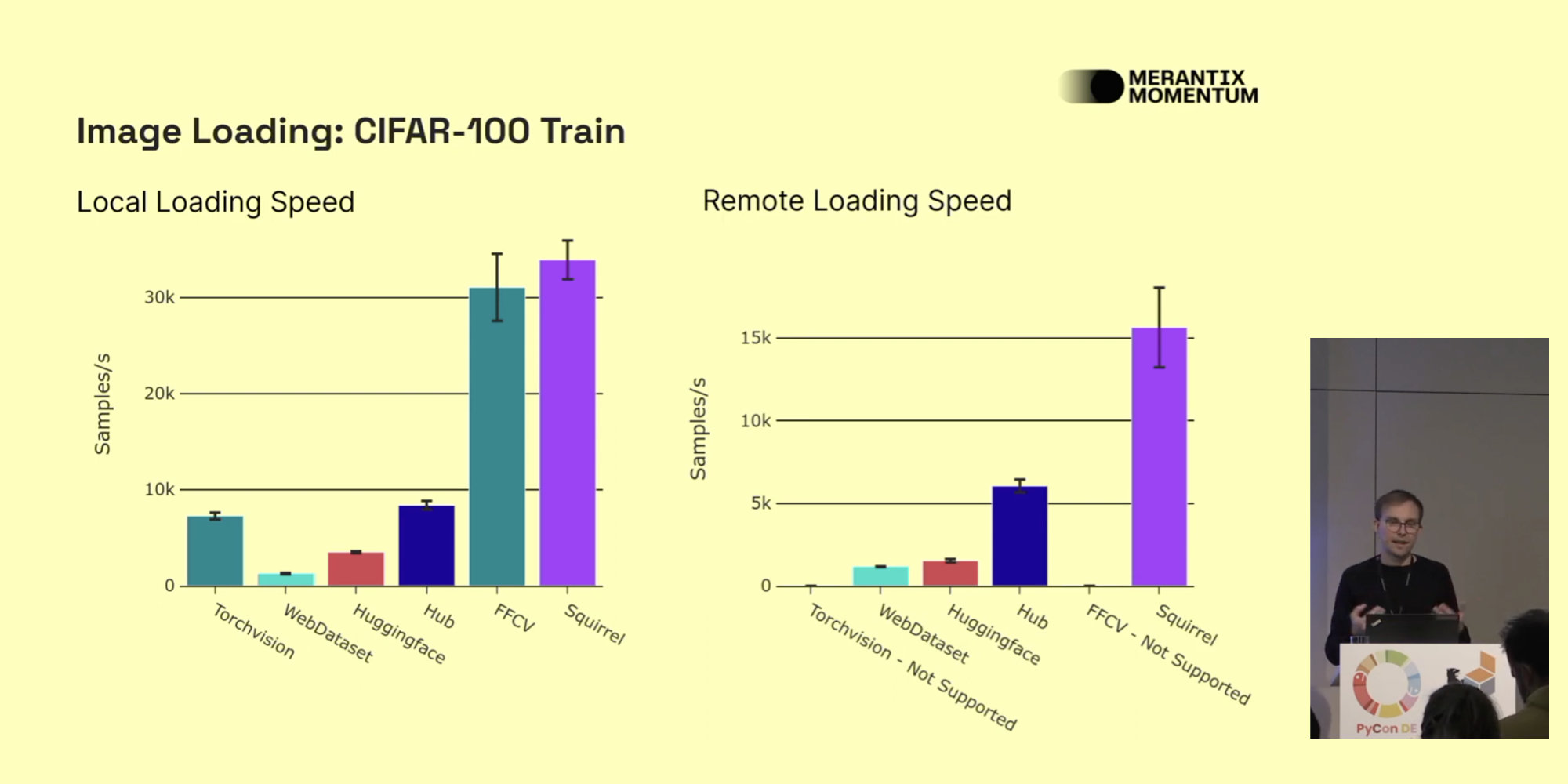

At Merantix Momentum, I'm benchmarking our data loading tool Squirrel. Preliminary results show that data loading pipelines can benefit greatly from Squirrel's improved throughput. When we open-sourced Squirrel, our VP of Engineering, Dr. Thomas Wollmann, showcased some of our initial results at the PyData 2022 conference in Berlin. I'm also an active code contributor to Squirrel. If you have feedback, let's chat! — with Dr. Wollmann.

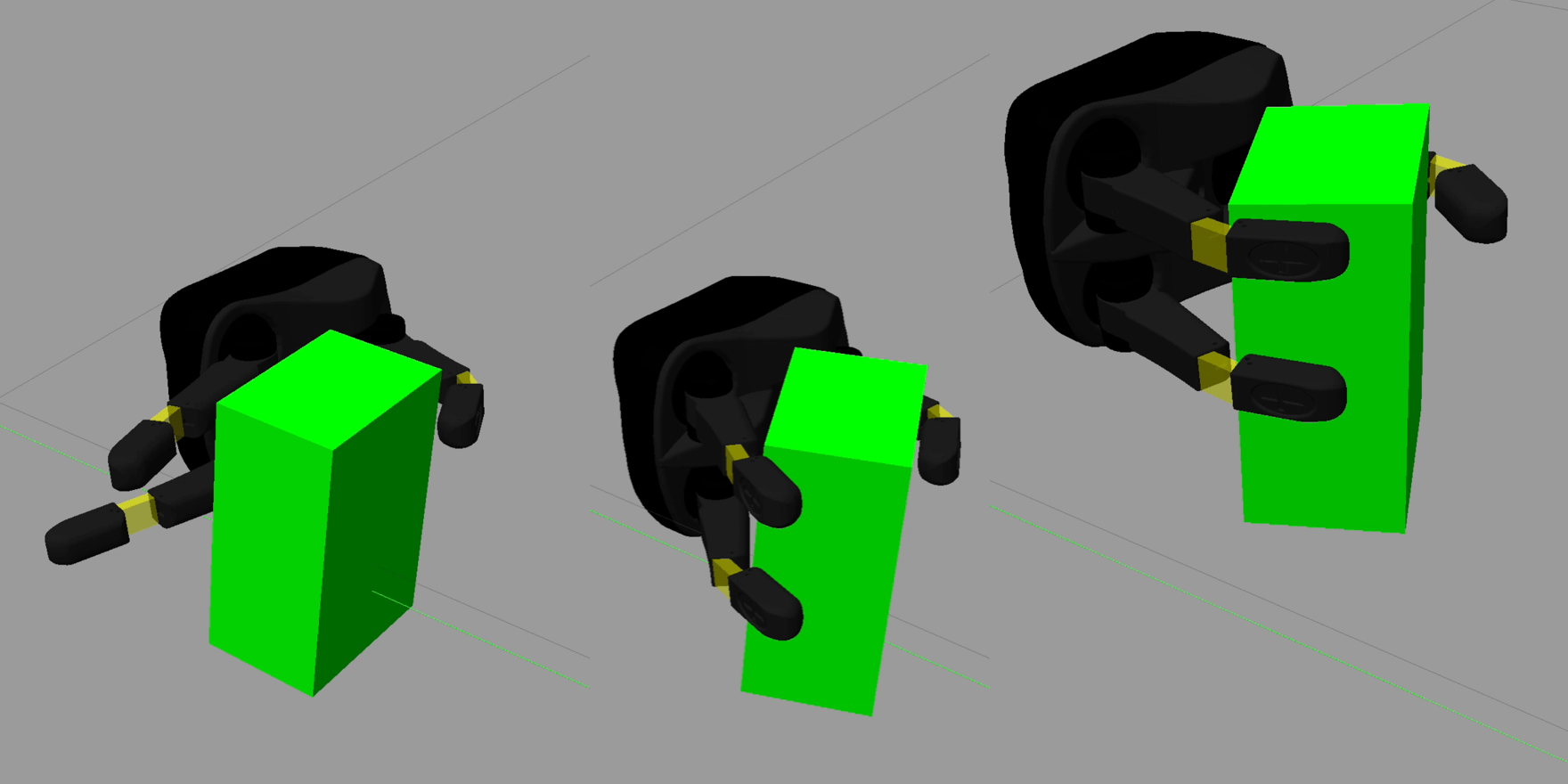

For my master's thesis in the Harvard Biorobotics Lab, I investigated the role of tactile sensing in learning and deploying robotic grasping controllers. I'm grateful that this research was supported by a full scholarship from the German Academic Exchange Service (DAAD) and by funding from the US National Science Foundation (NSF). Our work was first published at the Workshop on Reinforcement Learning for Contact-Rich Manipulation at ICRA 2022. An extended version was later accepted at IROS 2022 as a full paper. — with Prof. Howe, Prof. Janson, and Prof. Menze

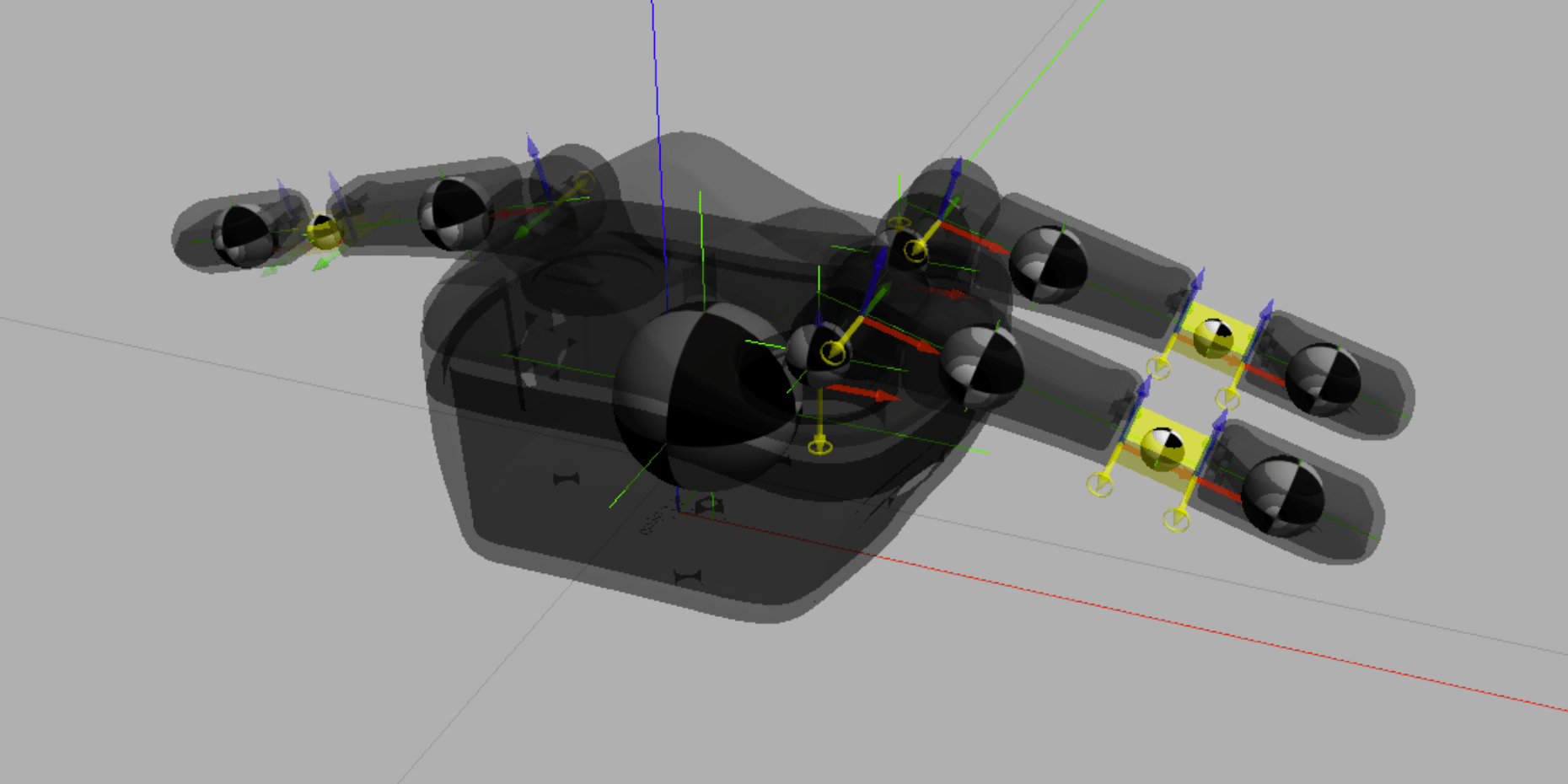

In preparation for my research project on robotic grasp refinement, I created a simulator for a robotic hand using Gazebo, C++, and the Robot Operating System (ROS). This open-source simulation stack also calculates various metrics that are useful for grasp analysis. The package is available as a pre-built Docker container. — with Prof. Howe

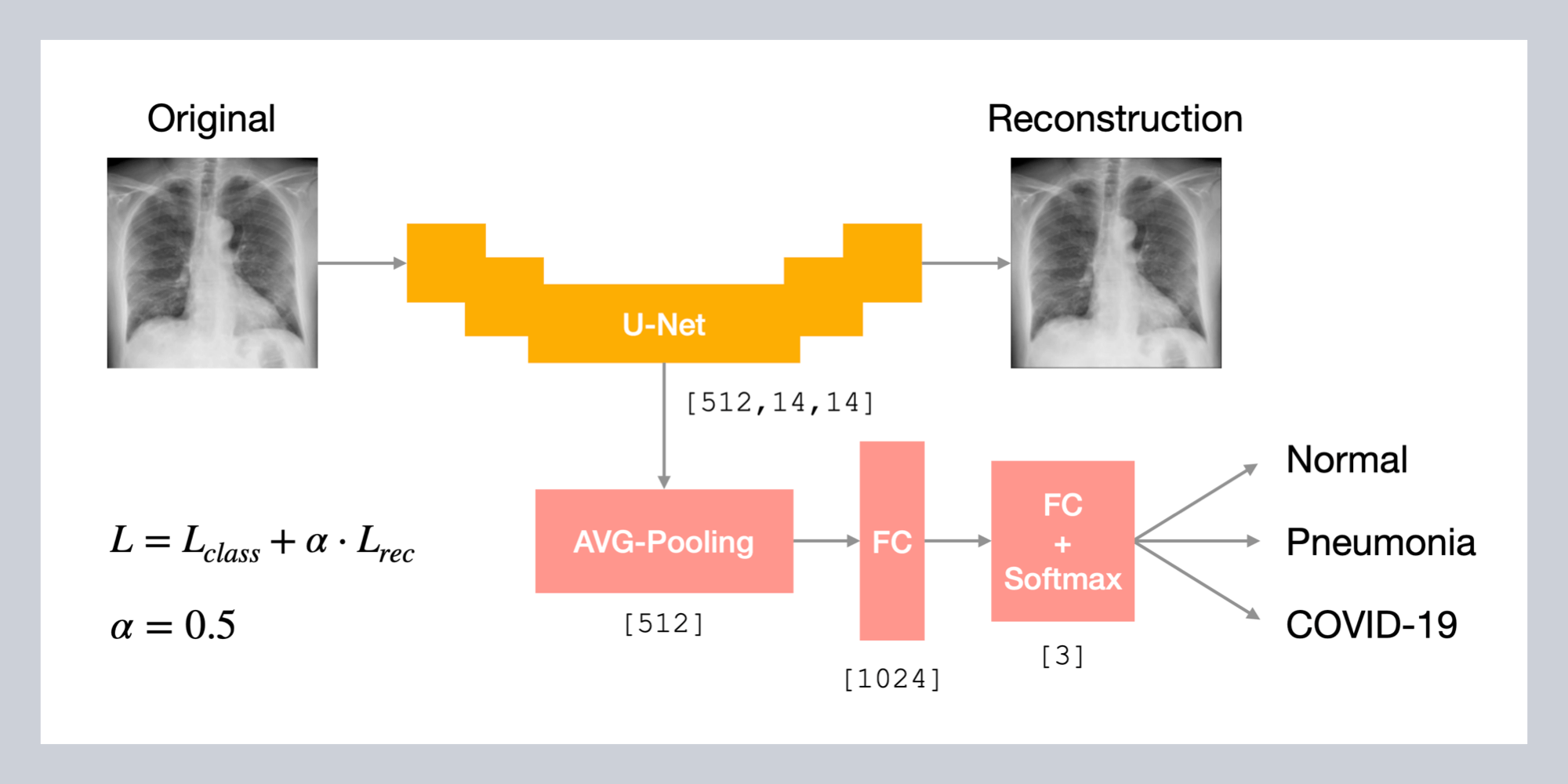

I worked on COVID-19 detection from chest radiographs as part of the Deep Learning in Medical Imaging course at the Department of Biomedical Engineering at Tel Aviv University. We compared three approaches: (1) transfer learning with a pre-trained network, (2) anomaly detection using an autoencoder trained on healthy lung images, and (3) multi-task learning of image classification and reconstruction. — with Prof. Greenspan

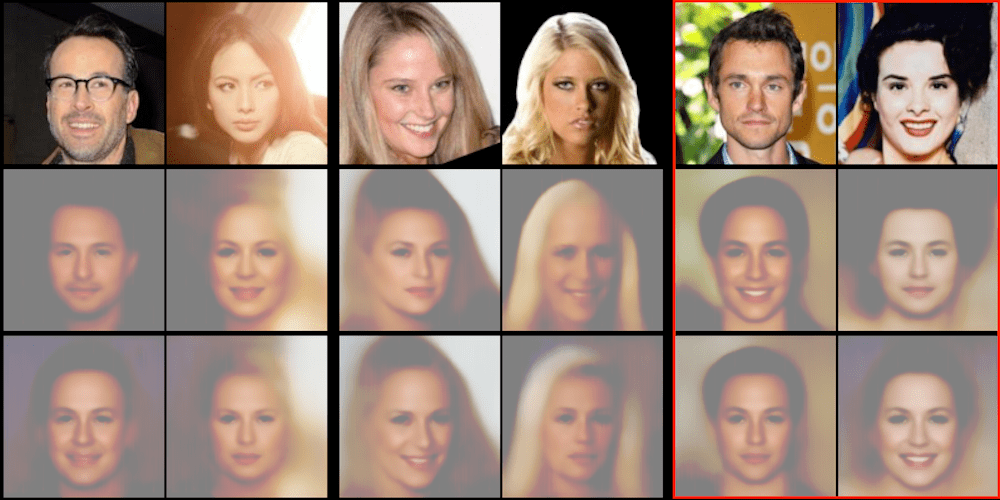

I took part in the Machine Learning in Computer Graphics practical offered by the Blavatnik School of Computer Science at Tel Aviv University. In a small team, we developed a new method for self-supervised class and content disentanglement. — with Prof. Cohen-Or

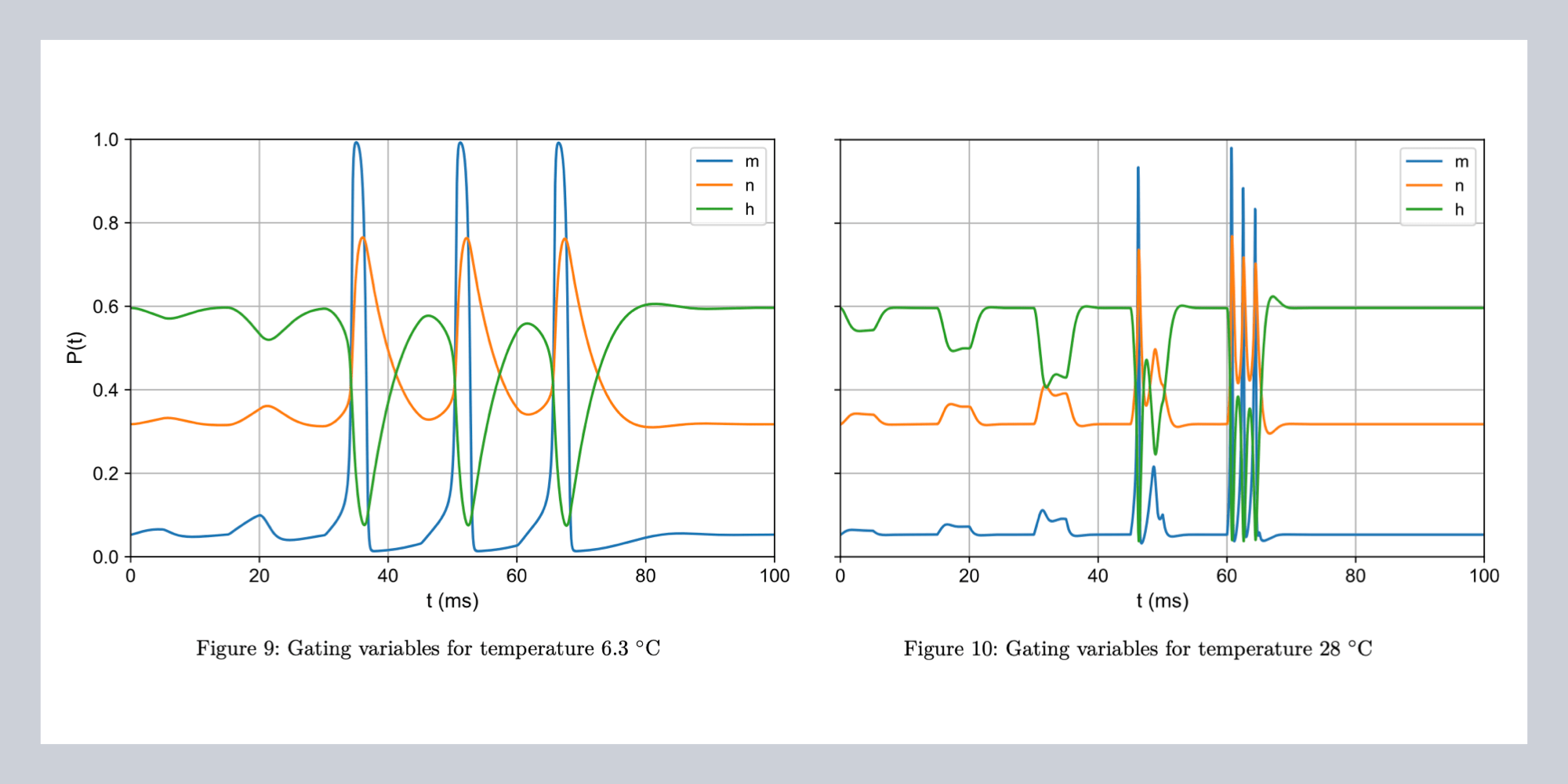

I participated in a course on neuroprosthetics at the TUM Chair for Bio-inspired Information Processing. In the practical part, I coded up the famous Hodgkin-Huxley model and simulated neuronal behavior under different electrical stimuli. I also implemented basic encoding strategies for cochlear implants and a noise vocoder to study how the signals are perceived by the patient. — with Prof. Hemmert

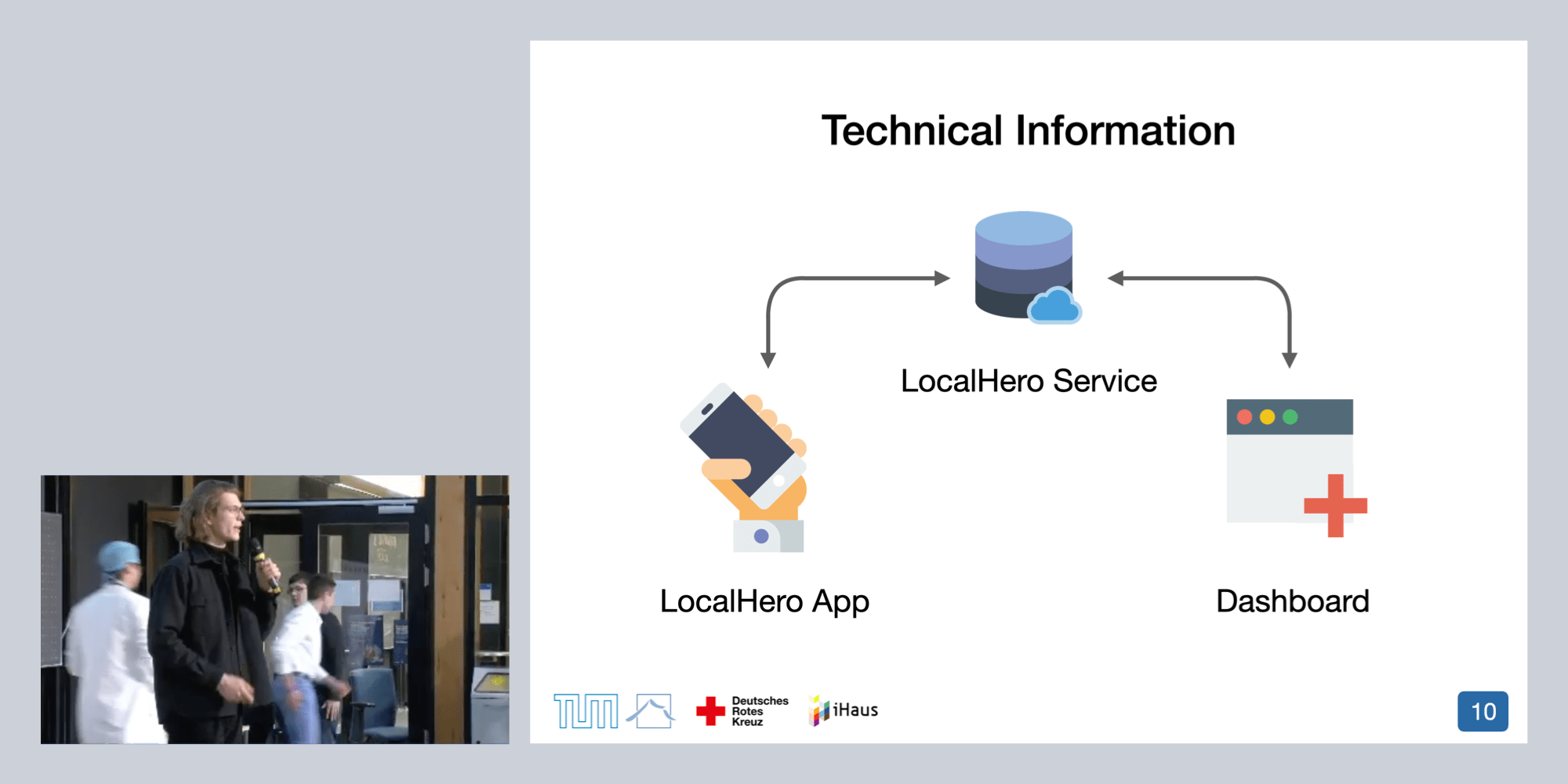

During my master's studies, I took part in TUM's software-engineering practical iPraktikum. Our team built the community-based iPhone application LocalHero for the German Red Cross in cooperation with an industry partner. The system connects people who need general assistance or face urgent medical emergencies with nearby users who can help. — with Prof. Brügge

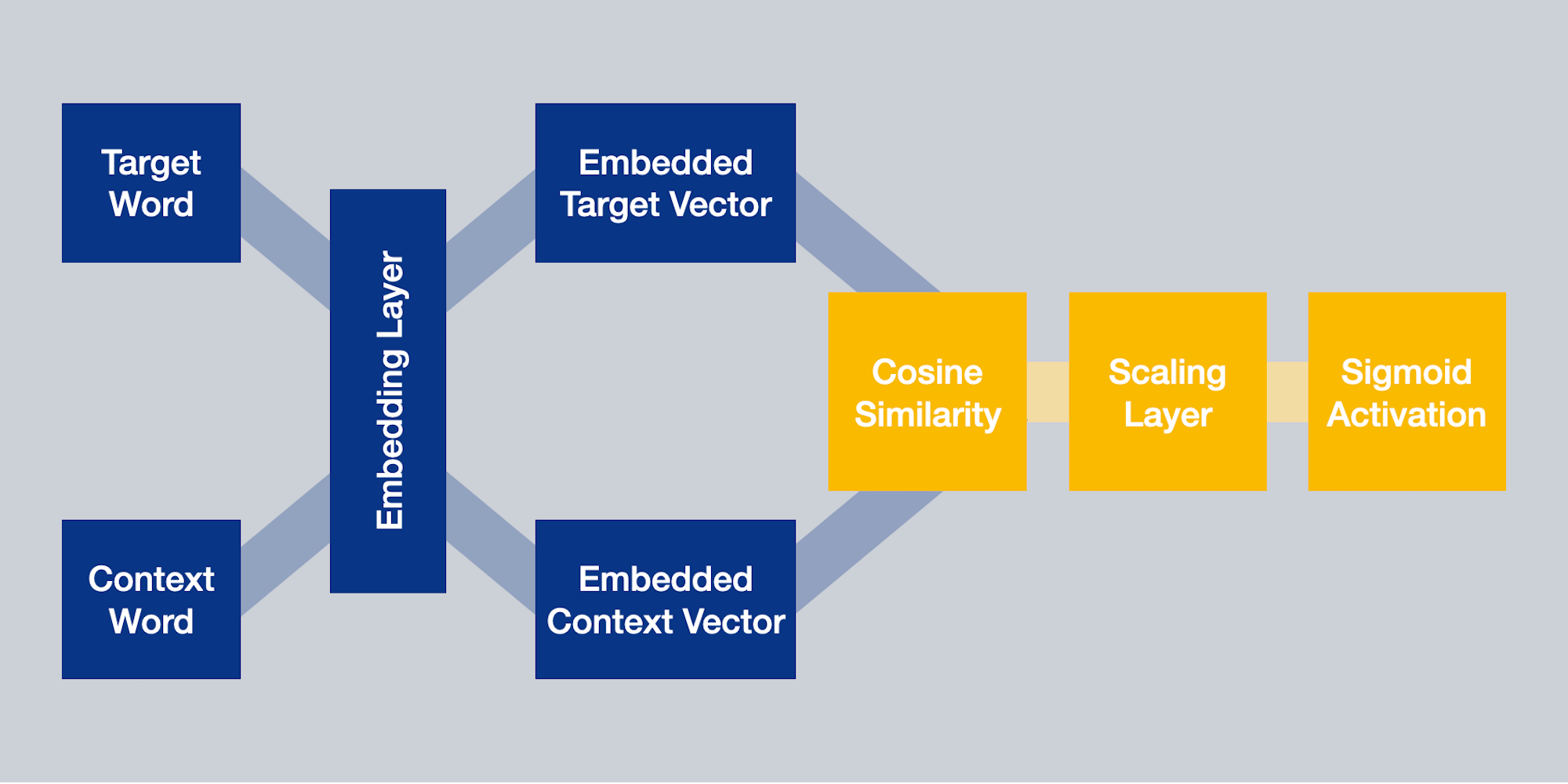

In the master's seminar Applied Deep Learning in Natural Language Processing at the Chair for Political Data Science, I improved an existing approach for generating word embeddings and used it to compare gender bias in religious texts. — with Prof. Hegelich

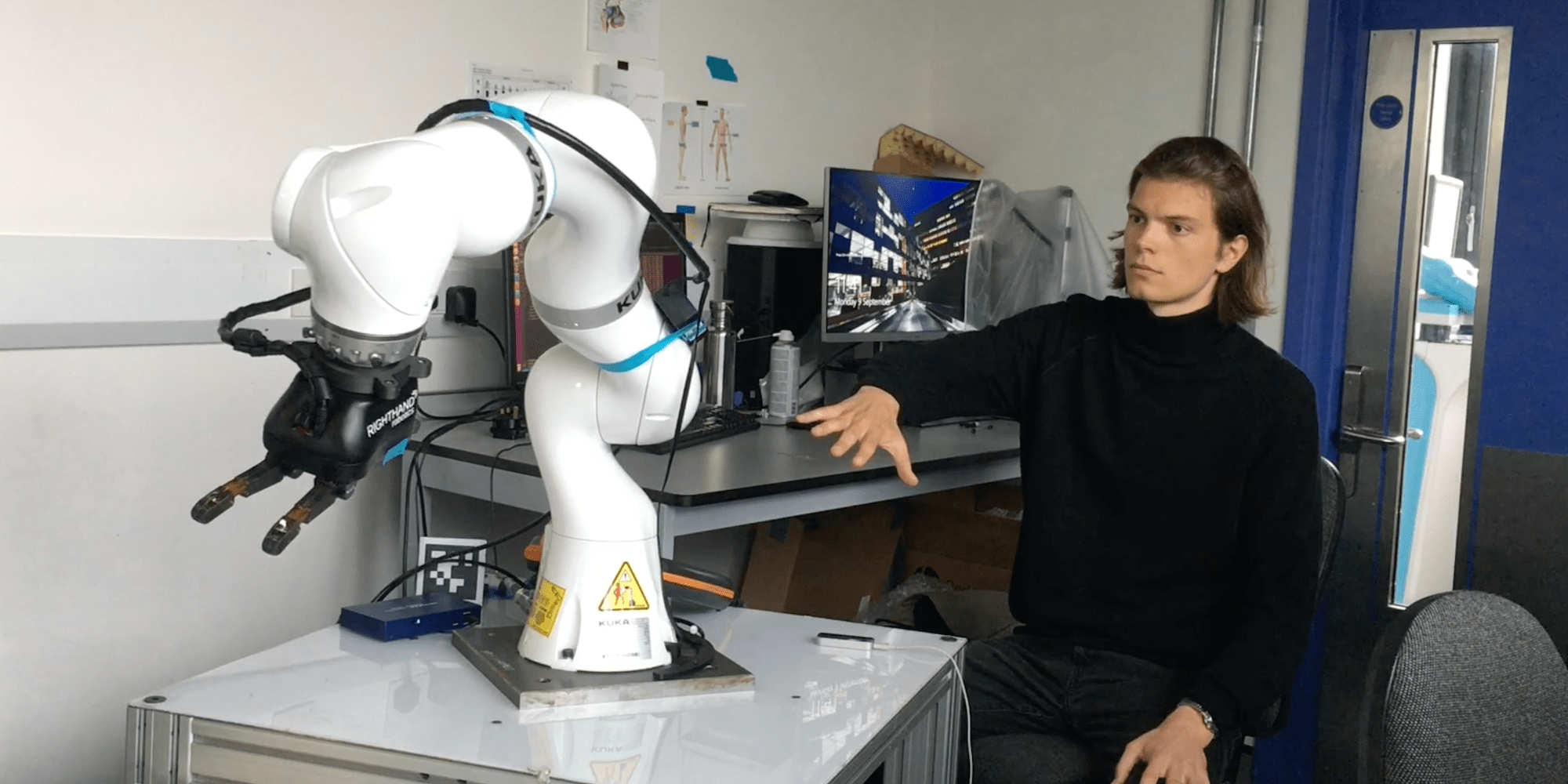

During my research internship at the Mechatronics in Medicine Lab at Imperial College London, I built a modular ROS platform to intuitively control a robotic rig with a gesture tracker. Visual feedback via a virtual reality headset allows for remote teleoperation of the robot. I also used data from an RGB-D camera to explore ideas for autonomous robotic grasping. Our paper on the educational use of this platform was accepted at IEEE RO-MAN 2021. — with Dr. Secoli and Prof. Rodriguez y Baena

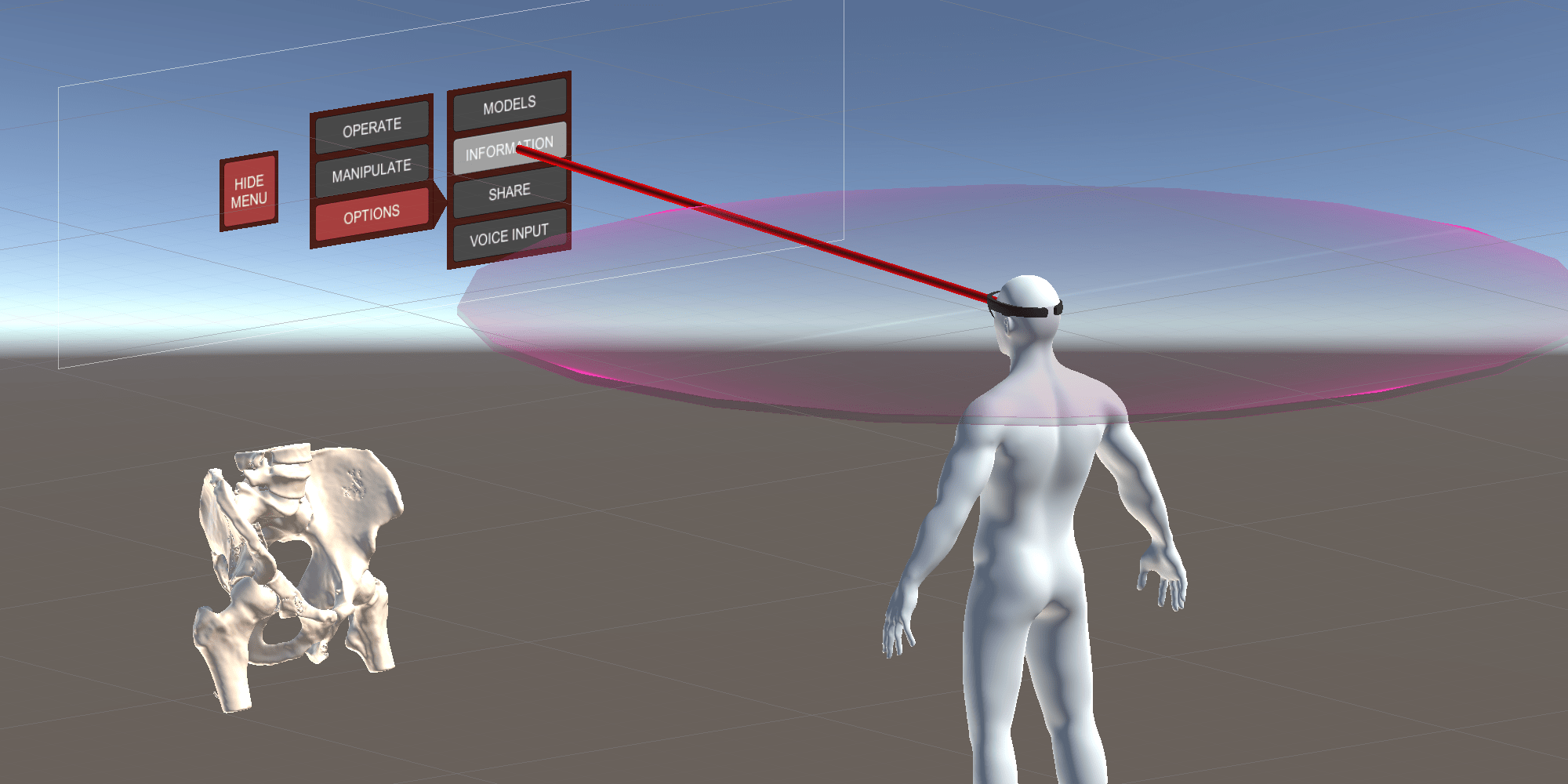

For my bachelor's thesis at the NARVIS Lab, I developed an application for the Microsoft HoloLens to support orthopedic trauma surgeons with intra-operative 3D visualizations of complex bone fractures. I conducted a user study with four trauma surgeons to evaluate my work. — with Dr. Eck and Prof. Navab